A Strong Emphasis on Minimizing Data Duplication

Overall, the trend of minimizing data movement aligns with the need for cost optimization, data efficiency, and streamlined data workflows in modern data architectures on cloud platforms. By leveraging the appropriate zone and layering strategies, organizations can achieve these benefits and optimize their data processing pipelines.

Zones (in OneLake or Delta Lake storage) are another advancement in data processing layers. They are logical partitions or containers within a data lake or data storage system that segregate and organize data by criteria such as data source, data type, or data ownership. Microsoft Fabric supports OneLake and Delta Lake as storage mechanisms for managing data zones efficiently.

By organizing data into different zones or layers based on its processing status and level of refinement, organizations can limit data movement to only when necessary. The concept of zones, such as landing zones, bronze/silver/gold layers, or trusted zones, allows for incremental data processing and refinement without moving data between different storage locations. It also supports effective management of data governance and security.
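The layering idea can be illustrated with a minimal Python sketch. It is not a OneLake or Delta Lake API: the `Lake` class, the `sales` table, and the refinement rules are hypothetical, and an in-memory dictionary stands in for a single storage location. The point it shows is that bronze, silver, and gold are logical prefixes in one store, and that each run refines only the rows that arrived since the previous run.

```python
from dataclasses import dataclass, field


@dataclass
class Lake:
    """One storage location; zones are logical prefixes, not separate systems."""
    tables: dict = field(default_factory=dict)      # "zone/table" -> list of rows
    watermarks: dict = field(default_factory=dict)  # "zone/table" -> rows already read

    def append(self, zone, table, rows):
        self.tables.setdefault(f"{zone}/{table}", []).extend(rows)

    def read_new(self, zone, table):
        """Return only rows added since the last call (incremental processing)."""
        key = f"{zone}/{table}"
        rows = self.tables.get(key, [])
        start = self.watermarks.get(key, 0)
        self.watermarks[key] = len(rows)
        return rows[start:]


def bronze_to_silver(lake):
    """Clean and type new raw rows; skip records that fail validation."""
    cleaned = []
    for row in lake.read_new("bronze", "sales"):
        try:
            cleaned.append(
                {"store": row["store"].strip().upper(), "amount": float(row["amount"])}
            )
        except (KeyError, ValueError):
            continue  # a real pipeline would route these to a quarantine table
    lake.append("silver", "sales", cleaned)


def silver_to_gold(lake):
    """Rebuild the business-level aggregate from the silver zone."""
    totals = {}
    for row in lake.tables.get("silver/sales", []):
        totals[row["store"]] = totals.get(row["store"], 0.0) + row["amount"]
    lake.tables["gold/sales_by_store"] = [
        {"store": store, "total": round(total, 2)}
        for store, total in sorted(totals.items())
    ]


lake = Lake()
lake.append("bronze", "sales", [
    {"store": " north ", "amount": "10.50"},
    {"store": "south", "amount": "n/a"},  # rejected at the silver step
])
bronze_to_silver(lake)
silver_to_gold(lake)

# A later load: only the one new bronze row is refined on this run.
lake.append("bronze", "sales", [{"store": "North", "amount": "4.50"}])
bronze_to_silver(lake)
silver_to_gold(lake)

gold = lake.tables["gold/sales_by_store"]
```

The raw rows stay in the bronze zone untouched, so a refinement rule can be changed and replayed without re-ingesting from the source.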

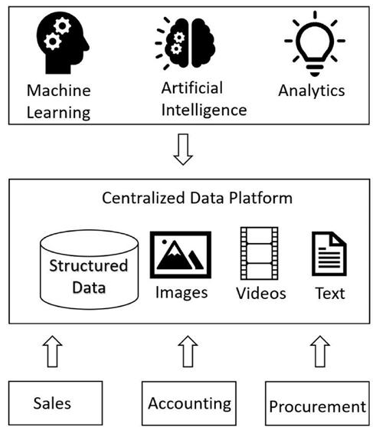

With the advancement of data architecture on cloud platforms, there is a growing emphasis on minimizing data movement. This approach aims to optimize costs and enhance speed in data processing, delivery, and presentation. Cloud platforms like Databricks and the newly introduced Microsoft Fabric support the concept of a unified data lake platform to achieve these goals.

By using a single shared compute layer across services such as Azure Synapse Analytics, Azure Data Factory, and Power BI, Microsoft Fabric (in preview at the time of writing this book) makes efficient use of computational resources. This shared compute layer eliminates the need to move data between different layers or services, reducing the costs associated with data replication and transfer.

Furthermore, Microsoft Fabric introduces the concept of linked data sources, allowing the platform to reference data stored in multiple locations, such as Amazon S3, Google Cloud Storage, local servers, or Teams. This capability enables seamless access to data across different platforms as if they were all part of a single data platform. It eliminates the need for copying data from one layer to another, streamlining data orchestration, ETL processes, and pipelines.
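The following Python sketch illustrates the general idea of referencing data in place instead of copying it. It is not the Microsoft Fabric API: the `Catalog` and `Link` classes, the bucket names, and the in-memory "remote stores" are all hypothetical stand-ins. A logical name is mapped to a URI, and a reader chosen by URI scheme fetches the data only when it is requested.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Link:
    name: str    # logical name used by consumers
    target: str  # URI of the data where it actually lives


class Catalog:
    """Maps logical names to external locations without copying any data."""

    def __init__(self):
        self._links = {}
        self._readers = {}

    def register_reader(self, scheme, reader):
        """Register a callable that can read a URI with the given scheme."""
        self._readers[scheme] = reader

    def link(self, name, target):
        self._links[name] = Link(name, target)

    def read(self, name):
        """Resolve the link and read from the source at access time."""
        link = self._links[name]
        scheme = urlparse(link.target).scheme
        return self._readers[scheme](link.target)


# Hypothetical stand-ins for remote object stores.
FAKE_S3 = {"s3://sales-bucket/orders.csv": ["order-1", "order-2"]}
FAKE_GCS = {"gs://marketing-bucket/leads.csv": ["lead-1"]}

catalog = Catalog()
catalog.register_reader("s3", FAKE_S3.__getitem__)
catalog.register_reader("gs", FAKE_GCS.__getitem__)
catalog.link("orders", "s3://sales-bucket/orders.csv")
catalog.link("leads", "gs://marketing-bucket/leads.csv")

# Consumers use logical names and never see where the data is stored.
combined = catalog.read("orders") + catalog.read("leads")
```

Because the catalog stores only names and URIs, adding a new source is a metadata change rather than a new copy job.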

Modern data orchestration relies on multiple concepts that work together in data integration to facilitate the acquisition, processing, storage, and delivery of data. The most important of these are ETL, ELT, data pipelines, and workflows. Before delving deeper into data integration and data pipelines, let’s explore these major concepts, their origins, and their current usage:

• ETL and ELT are data integration approaches where ETL involves extracting data, transforming it, and then loading it into a target system, while ELT involves extracting data, loading it into a target system, and then performing transformations within the target system. ETL gained popularity in the early days of data integration for data warehousing and business intelligence, but it faced challenges with scalability and real-time processing. ELT emerged as a response to these challenges, leveraging distributed processing frameworks and cloud-based data repositories. Modern data integration platforms and services offer both ETL and ELT capabilities, and hybrid approaches combining elements of both are also common.

• A data pipeline is a sequence of steps that move and process data from source to target systems. It includes data extraction, transformation, and loading, and can involve various components and technologies, such as batch processing or stream processing frameworks. Depending on the framework used, a pipeline can run on a schedule or process data in real time or near real time.

• A workflow is the sequence of tasks or actions involved in a data integration or data processing job. It defines the logical order in which the steps of a data pipeline or data integration process are executed. Workflows can be designed using visual interfaces or programming languages, and they help automate and manage complex data integration processes. They can include data transformations, dependencies, error handling, and scheduling to ensure that the data integration tasks execute efficiently.
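The three concepts above can be sketched together in a few lines of Python. This is an illustration under stated assumptions, not a production design: the order rows, table names, and task names are hypothetical, and an in-memory SQLite database stands in for the target system. The ETL function transforms rows before loading them, the ELT function loads raw rows and transforms them inside the target with SQL, and a small workflow runs the tasks in dependency order.

```python
import sqlite3
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical source rows: amounts arrive as text, countries in lowercase.
RAW_ORDERS = [
    {"id": 1, "amount": "19.99", "country": "us"},
    {"id": 2, "amount": "5.00", "country": "de"},
    {"id": 3, "amount": "12.50", "country": "us"},
]


def extract():
    """Stand-in for reading rows from a source system."""
    return list(RAW_ORDERS)


def etl(conn):
    """ETL: transform in the pipeline, then load the finished rows."""
    cleaned = [
        (r["id"], float(r["amount"]), r["country"].upper()) for r in extract()
    ]
    conn.execute("CREATE TABLE orders_etl (id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def elt(conn):
    """ELT: load raw rows as-is, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE orders_raw (id INTEGER, amount TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO orders_raw VALUES (:id, :amount, :country)", extract()
    )
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT id, CAST(amount AS REAL) AS amount, UPPER(country) AS country "
        "FROM orders_raw"
    )


def totals(conn, table):
    """Total order amount per country from one of the tables created above."""
    query = (
        f"SELECT country, ROUND(SUM(amount), 2) FROM {table} "
        "GROUP BY country ORDER BY country"
    )
    return conn.execute(query).fetchall()


def run_workflow(tasks, dependencies):
    """Run tasks in an order that respects their dependencies."""
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order


conn = sqlite3.connect(":memory:")
results = {}
tasks = {
    "etl": lambda: etl(conn),
    "elt": lambda: elt(conn),
    "report": lambda: results.update(
        etl=totals(conn, "orders_etl"), elt=totals(conn, "orders_elt")
    ),
}
# Each key maps to the set of tasks that must finish before it can start.
dependencies = {"etl": set(), "elt": set(), "report": {"etl", "elt"}}
order = run_workflow(tasks, dependencies)
```

Both approaches produce the same totals here. The practical difference is where the transformation runs: ELT keeps the untransformed `orders_raw` table in the target, so the transformation can be revised and rerun there without extracting from the source again.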

In summary, modern data orchestration encompasses data integration, pipelines, event-driven architectures, stream processing, cloud-based solutions, automation, and data governance. It emphasizes real-time processing, scalability, data quality, and automation to enable organizations to leverage their data assets effectively for insights, decision-making, and business outcomes. Let us understand it better by diving into data integration, data pipelines, ETL, supporting tools, and use cases.