Data Orchestration Concepts

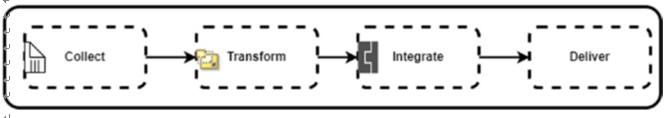

Data orchestration techniques are used to manage and coordinate the flow of data across various systems, applications, and processes within an organization. These techniques help ensure that data is collected, transformed, integrated, and delivered to the right place at the right time (Figure 5-1).

Figure 5-1. Some of the data engineering activities where orchestration is required

Data orchestration often involves data integration and extract, transform, load (ETL) processes. Data integration refers to combining data from different sources into a unified view, while ETL involves extracting data from source systems, transforming it to meet the target system’s requirements, and loading it into the destination system.
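The extract, transform, load sequence described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `SOURCE_CSV` data, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the destination system.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, API, or database.
SOURCE_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,not_a_number
3,carol,7.25
"""


def extract(raw_csv):
    """Extract: read rows from the source system (here, a CSV string)."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows):
    """Transform: convert types to match the target schema and drop invalid rows."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        cleaned.append((int(row["order_id"]), row["customer"].title(), amount))
    return cleaned


def load(records, conn):
    """Load: write the transformed records into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(raw_csv, conn):
    """Run the three stages in order."""
    load(transform(extract(raw_csv)), conn)


conn = sqlite3.connect(":memory:")  # assumed destination: an in-memory database
run_etl(SOURCE_CSV, conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping the three stages as separate functions means each one can be tested, retried, or replaced independently, which is the property orchestration tools rely on.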

Orchestration has evolved alongside advancements in data management technologies and the increasing complexity of data ecosystems. Traditional approaches relied on manual, ad hoc methods of data integration, which were time-consuming and error-prone. As organizations adopted distributed systems, cloud computing, and Big Data technologies, the need for automated and scalable data orchestration techniques became evident.

In the early days, batch processing was a common data orchestration technique. Data was collected over a period, stored in files or databases, and processed periodically. Batch processing is suitable for scenarios where real-time data processing is not necessary, and it is still used in various applications today.

With the rise of distributed systems and the need for real-time data processing, message-oriented middleware (MOM) became popular. MOM systems enable asynchronous communication between different components or applications by sending messages through a middleware layer. This technique decouples the sender and the receiver, allowing for more flexible and scalable data orchestration.
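The decoupling that a middleware layer provides can be illustrated with Python's standard library alone. In this sketch, a `queue.Queue` plays the role of the middleware; real MOM products add persistence, network transport, and delivery guarantees that are not modeled here. The function names and event strings are assumptions made for the example.

```python
import queue
import threading


def producer(broker, events):
    """Sender: hands messages to the middleware without waiting for processing."""
    for event in events:
        broker.put(event)
    broker.put(None)  # sentinel telling the consumer there is nothing more to read


def consumer(broker, processed):
    """Receiver: pulls messages from the middleware at its own pace."""
    while True:
        message = broker.get()
        if message is None:
            break
        processed.append(message.upper())  # stand-in for real processing


def run_demo(events):
    broker = queue.Queue()  # the in-process stand-in for the middleware layer
    processed = []
    worker = threading.Thread(target=consumer, args=(broker, processed))
    worker.start()
    producer(broker, events)
    worker.join()
    return processed


result = run_demo(["order_created", "order_shipped"])
print(result)
```

Neither function holds a reference to the other; both know only the queue. That is the sense in which the sender and the receiver are decoupled.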

A related approach is the enterprise service bus (ESB), a software architecture that provides a centralized infrastructure for integrating applications and services within an enterprise. An ESB facilitates the exchange of data between systems using standardized interfaces, protocols, and message formats. ESBs offer features such as message routing, transformation, and monitoring, which makes them useful for data orchestration in complex environments.
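Message routing and transformation, two of the features mentioned above, can be shown with a toy bus. The `MiniBus` class below is purely hypothetical and is not the API of any ESB product; it only illustrates the idea that one incoming message is routed by type and reshaped into the format each destination expects.

```python
import json


class MiniBus:
    """Toy stand-in for an ESB: routes messages by type and transforms them per destination."""

    def __init__(self):
        self.routes = {}

    def register(self, message_type, transform, handler):
        """Subscribe a destination handler, with the transformation it requires."""
        self.routes.setdefault(message_type, []).append((transform, handler))

    def publish(self, message):
        """Route a message to every destination registered for its type."""
        deliveries = 0
        for transform, handler in self.routes.get(message["type"], []):
            handler(transform(message))
            deliveries += 1
        return deliveries  # a crude monitoring signal: how many deliveries were made


billing_inbox = []    # hypothetical destination that expects JSON
warehouse_inbox = []  # hypothetical destination that expects pipe-delimited text

bus = MiniBus()
bus.register(
    "order",
    lambda m: json.dumps({"id": m["id"], "total": m["total"]}),
    billing_inbox.append,
)
bus.register("order", lambda m: f"{m['id']}|{m['sku']}", warehouse_inbox.append)

delivered = bus.publish({"type": "order", "id": 42, "sku": "A-100", "total": 19.99})
print(delivered, billing_inbox, warehouse_inbox)
```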

Modern data orchestration techniques often rely on data pipelines and workflow orchestration tools, deployed in the cloud or on-premises, to support both batch processing and real-time systems built on event-driven messaging and continuous data streaming. The major areas that modern orchestration covers are as follows:

• Data Pipelines and Workflow Orchestration: Data pipelines provide a structured way to define and execute a series of data processing steps, including data ingestion, transformation, and delivery. Workflow orchestration tools help coordinate and manage the execution of complex workflows involving multiple tasks, dependencies, and error handling.

• Stream Processing and Event-Driven Architecture: As the demand for real-time data processing and analytics increased, stream processing and event-driven architecture gained prominence. Stream processing involves continuously processing and analyzing data streams in real time, enabling organizations to derive insights and take immediate action. Event-driven architectures use events and event-driven messaging to trigger actions and propagate data changes across systems.

• Cloud-Based Data Orchestration: Cloud computing has greatly influenced data orchestration techniques. Cloud platforms offer various services and tools for data ingestion, storage, processing, and integration. They provide scalable infrastructure, on-demand resources, and managed services, making it easier to implement and scale data orchestration workflows.
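To make the first item in the list more concrete, the sketch below shows the core of what a workflow orchestrator does: run tasks in dependency order and handle failures. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The task names and the skip-on-upstream-failure policy are assumptions chosen for the example; real orchestration tools add scheduling, retries, and monitoring on top of this idea.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, dependencies):
    """Run tasks in dependency order; skip any task whose upstream did not succeed.

    tasks:        maps task name -> callable taking no arguments
    dependencies: maps task name -> set of task names it depends on
    """
    order = list(TopologicalSorter(dependencies).static_order())
    status = {}
    for name in order:
        upstream = dependencies.get(name, set())
        if any(status[u] != "success" for u in upstream):
            status[name] = "skipped"  # error handling: do not run on bad inputs
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return order, status


log = []

# Hypothetical three-step pipeline: ingestion, transformation, delivery.
tasks = {
    "ingest": lambda: log.append("ingested"),
    "transform": lambda: log.append("transformed"),
    "deliver": lambda: log.append("delivered"),
}
dependencies = {
    "transform": {"ingest"},
    "deliver": {"transform"},
}

order, status = run_workflow(tasks, dependencies)
print(order)
print(status)
```

Declaring dependencies as data, rather than hard-coding the call order, lets the orchestrator decide what can run, what must wait, and what should be skipped after a failure.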