Moreover, data pipelines facilitate data integration and consolidation. Organizations often have data spread across multiple systems, databases, and applications. A data pipeline gathers, transforms, and consolidates data from these disparate sources into a unified, consistent format. This integrated view lets an organization derive insights from all of its data at once and make better-informed decisions.

At its core, a data pipeline consists of the following key components:

• Data Sources: These are the systems where the data originates. They can be internal systems, external sources, or a combination of both.

• Data Ingestion: This is the initial stage of the data pipeline, where data is collected from its source systems or external providers. It involves extracting data from various sources, such as databases, APIs, files, streaming platforms, or IoT devices. Data ingestion processes should account for data volume, velocity, variety, and quality to ensure efficient and reliable acquisition of data.

• Data Processing: Once the data is ingested, it goes through various processing steps to transform, clean, and enrich it. This stage involves applying business rules, algorithms, or transformations to manipulate the data into a desired format or structure. Common processing tasks include filtering, aggregating, joining, validating, and normalizing the data. The goal is to prepare the data for further analysis and downstream consumption.

• Data Transformation: In this stage, the processed data is further transformed to meet specific requirements or standards. This may involve converting data types, encoding or decoding data, or performing complex calculations. Data transformation ensures that the data is in a consistent and usable format for subsequent stages or systems. Transformations can be performed using tools, programming languages, or specialized frameworks designed for data manipulation.

• Data Storage: After transformation, the data is stored in a persistent storage system, such as a data warehouse, data lake, or a database. The choice of storage depends on factors such as data volume, latency requirements, querying patterns, and cost considerations. Effective data storage design is crucial for data accessibility, scalability, and security. It often involves considerations like data partitioning, indexing, compression, and backup strategies.

• Data Delivery: The final stage of the data pipeline involves delivering the processed and stored data to the intended recipients or downstream systems. This may include generating reports, populating dashboards, pushing data to business intelligence tools, or providing data to other applications or services via APIs or data feeds. Data delivery should ensure the timely and accurate dissemination of data to support decision-making and enable actionable insights.
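The components above can be sketched as a small linear pipeline. This is only an illustration under stated assumptions: the CSV text, the `orders` table, and every function name are hypothetical, and an in-memory SQLite database stands in for a real data warehouse or data lake.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a real source system.
RAW_CSV = """order_id,region,amount
1,north,10.50
2,south,
3,north,4.50
4,south,20.00
"""


def ingest(raw_text):
    """Ingestion: read rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def process(rows):
    """Processing: validate rows and drop those with a missing amount."""
    return [row for row in rows if row["amount"].strip()]


def transform(rows):
    """Transformation: convert data types and normalize region names."""
    return [
        (int(row["order_id"]), row["region"].upper(), float(row["amount"]))
        for row in rows
    ]


def store(records, conn):
    """Storage: persist the records in a table (assumed schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def deliver(conn):
    """Delivery: aggregate the stored data into a small report."""
    cursor = conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    )
    return dict(cursor.fetchall())


def run_pipeline(raw_text):
    """Run the stages in order against an in-memory database."""
    conn = sqlite3.connect(":memory:")
    try:
        store(transform(process(ingest(raw_text))), conn)
        return deliver(conn)
    finally:
        conn.close()


if __name__ == "__main__":
    print(run_pipeline(RAW_CSV))
```

Keeping each stage as a separate function makes it possible to test, replace, or scale one stage without touching the others, which is the main reason pipelines are described in terms of these components.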

Throughout these stages, data pipeline orchestration and workflow management play a critical role. Orchestration involves defining the sequence and dependencies of the different stages and processes within the pipeline. Workflow management tools, such as Apache Airflow or Luigi, facilitate the scheduling, monitoring, and coordination of these processes, ensuring the smooth and efficient execution of the pipeline.
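To make the idea of sequence and dependencies concrete, the following minimal sketch orders tasks with Python's standard-library `graphlib` module (Python 3.9 or later). It is not how Apache Airflow or Luigi are used; the task names and the `DEPENDENCIES` mapping are assumptions chosen to mirror the stages described earlier.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "ingest": set(),
    "process": {"ingest"},
    "transform": {"process"},
    "store": {"transform"},
    "deliver": {"store"},
}


def execution_order(dependencies):
    """Return an order in which every task runs after its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


def run(dependencies, tasks):
    """Call each task in dependency order and return the names that ran."""
    completed = []
    for name in execution_order(dependencies):
        tasks[name]()  # a real orchestrator would also schedule, retry, and monitor
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Placeholder callables; a real pipeline would do actual work here.
    noop_tasks = {name: (lambda: None) for name in DEPENDENCIES}
    print(run(DEPENDENCIES, noop_tasks))
```

If the dependency mapping contains a cycle, `TopologicalSorter` raises `graphlib.CycleError`, which reflects a general constraint: the stages of a pipeline must form a directed acyclic graph for an execution order to exist.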

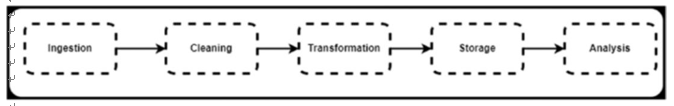

Data pipelines vary in complexity and scale depending on the organization’s requirements. They range from simple, linear pipelines with a few stages to complex, branching pipelines with parallel processing and conditional logic. The design and implementation of a data pipeline should be tailored to the specific use case, data sources, processing requirements, and desired outcomes (Figure 5-4).
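As a rough illustration of a branching pipeline with conditional logic and parallel processing, the sketch below routes each record to one of two branches and runs the branches concurrently with the standard-library `concurrent.futures` module. The routing rule, the branch names, and the processing functions are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def route(record):
    """Conditional logic: choose a branch based on the record's type."""
    return "numeric" if isinstance(record, (int, float)) else "text"


def process_numeric(values):
    """Numeric branch: aggregate the values."""
    return sum(values)


def process_text(values):
    """Text branch: normalize whitespace and case."""
    return [value.strip().lower() for value in values]


def run_branching_pipeline(records):
    """Split records into branches, then process the branches in parallel."""
    branches = {"numeric": [], "text": []}
    for record in records:
        branches[route(record)].append(record)

    with ThreadPoolExecutor(max_workers=2) as pool:
        numeric_future = pool.submit(process_numeric, branches["numeric"])
        text_future = pool.submit(process_text, branches["text"])
        return {
            "numeric_total": numeric_future.result(),
            "text_clean": text_future.result(),
        }


if __name__ == "__main__":
    print(run_branching_pipeline([1, " A ", 2.5, "b"]))
```

A linear pipeline would pass every record through the same steps; here the two branches are independent of each other, which is what allows them to run at the same time.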

The stages of a data pipeline are as follows:

• Ingestion: The data is collected from the data sources and loaded into the data pipeline.

• Cleaning: The data is cleaned to remove errors and inconsistencies.

• Transformation: The data is transformed into a format that is useful for analysis.

• Storage: The data is stored in a central location.

• Analysis: The data is analyzed to extract insights.

• Delivery: The data is delivered to users.

Figure 5-4. Steps in a generic data pipeline