Evolution of Data Orchestration

In traditional ETL processes, the primary layers consisted of extraction, transformation, and loading, forming a linear and sequential flow. Data was extracted from source systems, transformed to meet specific requirements, and loaded into a target system or data warehouse.

However, these traditional layers had limited flexibility and scalability. To address these shortcomings, the data staging layer was introduced. This dedicated space allowed for temporary data storage and preparation, enabling data validation, cleansing, and transformation before moving to the next processing stage.
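As a rough illustration of what a staging step does, the sketch below cleanses and validates a few extracted rows before anything is promoted downstream. The field names (`customer_id`, `amount`), the rejection reasons, and the `stage_rows` helper are hypothetical, chosen only for this example; real staging rules depend on the source systems involved.

```python
from dataclasses import dataclass, field


@dataclass
class StagingResult:
    """Outcome of one staging pass: rows to promote and rows held back."""
    accepted: list = field(default_factory=list)
    rejected: list = field(default_factory=list)


def stage_rows(raw_rows):
    """Validate and cleanse extracted rows before they move downstream."""
    result = StagingResult()
    for row in raw_rows:
        # Cleansing: trim whitespace and normalize case on the key field.
        customer_id = str(row.get("customer_id") or "").strip().upper()
        amount_text = str(row.get("amount") or "").strip()

        # Validation: the amount must be numeric and the key must be present.
        try:
            amount = float(amount_text)
        except ValueError:
            result.rejected.append({"row": row, "reason": "amount is not numeric"})
            continue
        if not customer_id:
            result.rejected.append({"row": row, "reason": "missing customer_id"})
            continue

        result.accepted.append({"customer_id": customer_id, "amount": amount})
    return result


# Hypothetical extract: one clean row, one missing its key, one with a bad amount.
extracted = [
    {"customer_id": " c001 ", "amount": "19.90"},
    {"customer_id": "", "amount": "5.00"},
    {"customer_id": "c002", "amount": "n/a"},
]
staged = stage_rows(extracted)
print(staged.accepted)
print([r["reason"] for r in staged.rejected])
```

Keeping rejected rows together with a reason, rather than silently dropping them, is what makes the error handling and recovery described next possible: a failed batch can be inspected and replayed from staging without re-extracting from the source.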

By improving data quality, the staging layer provided better error handling and recovery and paved the way for more advanced data processing. As data complexity grew, the need for a dedicated data processing and integration layer emerged. This layer focused on tasks such as data transformation, enrichment, aggregation, and integration from multiple sources. It incorporated business rules, complex calculations, and data quality checks, enabling more sophisticated data manipulation and preparation.

With the rise of data warehousing and OLAP technologies, the data processing layers evolved further. The data warehousing and OLAP layer supported multidimensional analysis and faster querying by using structures optimized for analytical processing. This layer facilitated complex reporting and ad-hoc analysis, giving organizations faster access to insights.
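Multidimensional analysis can be pictured as summing a measure over some dimensions while keeping others. The following is a minimal sketch in plain Python, not how an OLAP engine is implemented; the fact rows, the dimension names, and the `roll_up` helper are all hypothetical.

```python
from collections import defaultdict

# Hypothetical fact rows: (region, product, quarter, sales)
facts = [
    ("EU", "widget", "Q1", 100),
    ("EU", "widget", "Q2", 150),
    ("EU", "gadget", "Q1", 80),
    ("US", "widget", "Q1", 200),
    ("US", "gadget", "Q2", 120),
]
DIMENSIONS = ("region", "product", "quarter")


def roll_up(rows, keep):
    """Sum the sales measure over every dimension not listed in `keep`."""
    idx = [DIMENSIONS.index(name) for name in keep]
    totals = defaultdict(int)
    for row in rows:
        key = tuple(row[i] for i in idx)  # coordinates along the kept dimensions
        totals[key] += row[-1]            # the last column is the measure
    return dict(totals)


by_region = roll_up(facts, ["region"])
by_region_quarter = roll_up(facts, ["region", "quarter"])
print(by_region)
print(by_region_quarter)
```

An analytical store would typically precompute or index aggregates like these so that switching between the two views does not require rescanning every fact row.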

The advent of big data and data lakes introduced a new layer specifically designed for storing and processing massive volumes of structured and unstructured data. Data lakes served as repositories for raw and unprocessed data, facilitating iterative and exploratory data processing. This layer enabled data discovery, experimentation, and analytics on diverse datasets, opening doors to new possibilities.

In modern data processing architectures, multiple refinement stages are often included, such as landing, bronze, silver, and gold layers. Each refinement layer represents a different level of data processing, refinement, and aggregation, adding value to the data and providing varying levels of granularity for different user needs. These refinement layers enable efficient data organization, data governance, and improved performance in downstream analysis, ultimately empowering organizations to extract valuable insights from their data.
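The progression through refinement layers might look like the sketch below, which uses in-memory lists in place of real storage. The record shape, the cleaning rules, and the `to_bronze`, `to_silver`, and `to_gold` function names are assumptions made for illustration; what each layer actually contains varies between organizations.

```python
import json
from collections import defaultdict

# Landing: payloads exactly as they arrived (hypothetical JSON lines).
landing = [
    '{"order_id": 1, "region": "eu", "amount": "10.50"}',
    '{"order_id": 2, "region": "US", "amount": "4.00"}',
    '{"order_id": 2, "region": "US", "amount": "4.00"}',
    '{"order_id": 3, "region": "eu", "amount": null}',
]


def to_bronze(lines):
    """Bronze: parse raw payloads into records without altering values."""
    return [json.loads(line) for line in lines]


def to_silver(bronze):
    """Silver: drop incomplete rows, remove duplicates, normalize types."""
    seen, silver = set(), []
    for rec in bronze:
        if rec["amount"] is None or rec["order_id"] in seen:
            continue
        seen.add(rec["order_id"])
        silver.append({
            "order_id": rec["order_id"],
            "region": rec["region"].upper(),
            "amount": float(rec["amount"]),
        })
    return silver


def to_gold(silver):
    """Gold: aggregate to the grain a report would consume."""
    revenue = defaultdict(float)
    for rec in silver:
        revenue[rec["region"]] += rec["amount"]
    return dict(revenue)


bronze = to_bronze(landing)
silver = to_silver(bronze)
gold = to_gold(silver)
print(len(bronze), len(silver), gold)
```

Because each layer is derived only from the one before it, a change to a silver rule can be replayed from bronze without going back to the source systems, and consumers can read whichever layer matches the granularity they need.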

Modern data processing architecture has made data orchestration more efficient and effective, with better speed, security, and governance. The key impacts of this architecture on data orchestration and ETL are summarized below:

• Scalability: The evolution of data processing layers has enhanced the scalability of data orchestration pipelines. The modular structure and specialized layers allow for distributed processing, parallelism, and the ability to handle large volumes of data efficiently.

• Flexibility: Advanced data processing layers provide flexibility in handling diverse data sources, formats, and requirements. The modular design allows for the addition, modification, or removal of specific layers as per changing business needs. This flexibility enables organizations to adapt their ETL pipelines to evolving data landscapes.

• Performance Optimization: With specialized layers, data orchestration pipelines can optimize performance at each stage. The separation of data transformation, integration, aggregation, and refinement allows for parallel execution, selective processing, and efficient resource utilization. This leads to faster data processing and reduced time to insight.

• Data Quality and Governance: The inclusion of data staging layers and refinement layers enhances data quality, consistency, and governance. Staging areas allow for data validation and cleansing, reducing the risk of erroneous or incomplete data entering downstream processes. Refinement layers ensure data accuracy, integrity, and adherence to business rules.

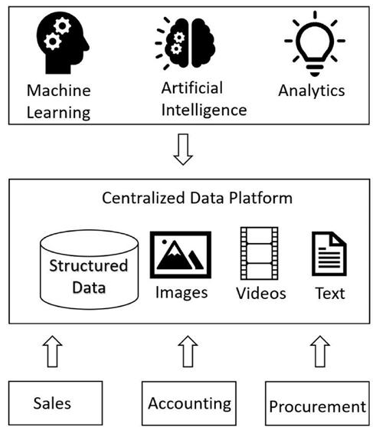

• Advanced Analytics: The availability of data warehousing, OLAP, and big data layers enables more advanced analytics capabilities. These layers support complex analytical queries, multidimensional analysis, and integration with machine learning and AI algorithms. They facilitate data-driven decision-making and insight generation.
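The scalability, flexibility, and performance points above can be sketched with a small dependency graph of stages. This example uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) and a thread pool; the stage names and the `run_stage` placeholder are hypothetical, and a production orchestrator would add retries, scheduling, and monitoring on top of this idea.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Hypothetical pipeline: each stage maps to the set of stages it depends on.
pipeline = {
    "stage_orders": set(),
    "stage_customers": set(),
    "integrate": {"stage_orders", "stage_customers"},
    "aggregate": {"integrate"},
}


def run_stage(name):
    """Placeholder for real work; returns the name so callers can log it."""
    return name


def run_pipeline(graph):
    """Run stages in dependency order, batching independent stages together."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            # Stages whose dependencies have all finished.
            ready = sorted(sorter.get_ready())
            # Independent stages in one batch are submitted to the pool together.
            finished = list(pool.map(run_stage, ready))
            sorter.done(*finished)
            batches.append(finished)
    return batches


batches = run_pipeline(pipeline)
print(batches)
```

Adding, modifying, or removing a layer amounts to editing one entry in `pipeline`, and any stages that do not depend on each other land in the same batch, which is where the parallelism comes from.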